Web Caching, a quick guide

1 November, 2021

7 mins

Source: Unsplash by @alireza_attari

Welcome to our blog on web caching, where we look at how to build lightning-fast web apps that stand out on a crowded and competitive internet. With abundant content and countless options available to end users, winning them over comes down to an exceptional user experience. No matter how remarkable your services are, users today demand one thing above all else: an immediate response. They expect fast feedback and have grown increasingly impatient, giving your web app a mere 2-3 seconds to impress before moving on. As daunting as this may sound, we have the tools and techniques to tackle this challenge head-on.

The time taken for a page to load is the result of many factors, such as parsing and executing JS scripts, loading assets (images, fonts, CSS), and fetching data. Reducing page load time improves the user experience and helps us meet users' expectations. One of the most powerful solutions at our disposal is caching, a technique that can significantly improve response times and change the way users interact with our applications. So, why wait? Let's delve into the world of web caching and unleash the true potential of our web apps.

When it comes to web performance and app optimization, caching is one of the common solutions you cannot overlook. It is built into browsers and HTTP, and developers can integrate it into an app without much configuration overhead. Let's learn when and how to use it.

What is caching?

Take a look at your desk and you will find some commonly used items there, like pens, a notepad, snack bars, a water bottle, a mobile charger, etc. Since your desk has limited space, you only keep things on it that you find important and use frequently, while you keep everything else in a cupboard or somewhere nearby, like your fridge 😉.

So in the above setup, when you feel thirsty you don't have to get up and walk to the kitchen to drink water; you can simply grab the bottle on your desk. This definitely saves time and effort.

Caching is similar. The idea is to store a copy of frequently used data as close to your user as possible to avoid re-fetching it. In caching, we identify data that we know won't change often, or data that is repeatedly accessed by the user, and move it close to the access point, which is our application. The data that is stored or copied during caching is known as the cache.

A desk setup as a cache store is a very contrived example, but it helps with the mental model. 🤷♂️

It's not only about response time; caching also helps us with:

- Performance: it reduces the latency between request and response, and when cached data is used for our pages, the startup time decreases.

- Server load: the number of requests reaching the server (requests per minute) decreases.

- Cost: it consumes less network bandwidth, which cuts costs.

Types

Before moving on to implementing caching, let's understand where in our architecture we can have a cache store. Caching can be introduced at multiple levels of an app ecosystem:

- Browser cache: A cache system built into the browser (memory cache or disk cache). It lives on the user's system and is the fastest cache and the closest to the user. Example: Chrome.

- Proxy cache: Here the cache is shared by multiple users who share the same proxy. When a proxy cache is used, the network trip to the origin (server) is avoided. The proxy server pulls the content from the origin server once in a while. Examples: proxy servers, reverse proxies (CDNs).

- Application cache: A cache stored at the application level, so data can be served directly from cache storage. It frees up compute resources. Examples: Redis, memcached, local storage.
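As a rough illustration of an application-level cache, the sketch below uses Python's built-in `functools.lru_cache` to keep results in process memory. It stands in for an external store such as Redis or memcached; the `load_user_profile` function and the `origin_calls` counter are hypothetical names made up for this example.

```python
from functools import lru_cache

origin_calls = {"count": 0}  # counts how often the "expensive" work really runs


@lru_cache(maxsize=128)
def load_user_profile(user_id):
    # Stand-in for an expensive database query or API call.
    origin_calls["count"] += 1
    return f"profile for user {user_id}"


first = load_user_profile(42)   # computed and stored in the cache
second = load_user_profile(42)  # served from the cache, no recomputation
info = load_user_profile.cache_info()
```

After the two calls, `info.hits` and `info.misses` are both 1, and the expensive function body ran only once.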

States

When a response is served from the cache, we have a cache hit; if the cache can't fulfill the request, we have a cache miss. When we use stored data instead of fetching it on every use, we should be aware of the state the cache is in:

- Cold cache: A cold cache is empty and results mostly in cache misses.

- Warm cache: The cache has started receiving requests and has begun retrieving objects and filling itself up.

- Hot cache: All cacheable objects are retrieved, stored, and up to date.
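To make hits, misses, and the cold-to-hot progression concrete, here is a minimal sketch. The `SimpleCache` class, the `fetch_from_origin` function, and the asset paths are all hypothetical names invented for illustration; real caches also handle eviction and expiry, which are left out here.

```python
class SimpleCache:
    """A dict-backed cache that counts hits and misses (illustrative only)."""

    def __init__(self, fetch):
        self._fetch = fetch  # called on a cache miss to get the real data
        self._store = {}
        self.hits = 0
        self.misses = 0

    def get(self, key):
        if key in self._store:
            self.hits += 1   # cache hit: served from the stored copy
            return self._store[key]
        self.misses += 1     # cache miss: fetch from the origin and remember it
        value = self._fetch(key)
        self._store[key] = value
        return value


def fetch_from_origin(path):
    # Stand-in for a slow network trip to the origin server.
    return f"contents of {path}"


cache = SimpleCache(fetch_from_origin)
assets = ["/app.js", "/style.css", "/logo.png"]

for path in assets:  # cold cache: nothing is stored yet, so every request misses
    cache.get(path)
cold_stats = (cache.hits, cache.misses)

for path in assets:  # hot cache: every object is stored, so every request hits
    cache.get(path)
hot_stats = (cache.hits, cache.misses)
```

The first pass records three misses and no hits; the second pass adds three hits without touching the origin again.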

Working with caching

The browser inspects the headers of the HTTP response generated by the web server to decide which responses it should cache on the user's system.

Expires

The Expires header can still be seen on many sites. It was introduced in HTTP/1.0, but it's not very common today.

- Expires carries an expiration date after which the asset is considered stale. This is an absolute value, the same for all clients.

- Because an absolute date is used for validation, the cache lifetime depends on the client's clock. The date itself is sent in GMT, so the web server's clock should be in sync with the client's.

- After the expiration date, the cache is not used and the browser makes a request to the server.

- The same resource expires at the same moment for all clients, which can produce a burst of simultaneous requests to the server that resembles a DDoS.
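The points above can be sketched with Python's standard library. The helper names `expires_header` and `is_fresh` are hypothetical, and the freshness check is a simplification of what a browser does; the sketch only shows that Expires is an absolute GMT date and that a client with a wrong clock reaches a different conclusion.

```python
from datetime import datetime, timedelta, timezone
from email.utils import format_datetime, parsedate_to_datetime


def expires_header(now, lifetime):
    """Build an Expires value: an absolute HTTP date, expressed in GMT."""
    return format_datetime(now + lifetime, usegmt=True)


def is_fresh(expires_value, client_now):
    """A cached copy is fresh until the client's clock passes the Expires date."""
    return client_now < parsedate_to_datetime(expires_value)


server_now = datetime(2021, 11, 1, 12, 0, 0, tzinfo=timezone.utc)
header = expires_header(server_now, timedelta(hours=1))

# A client whose clock agrees with the server still sees a fresh copy after 30 minutes.
in_sync = is_fresh(header, server_now + timedelta(minutes=30))

# A client whose clock runs two hours fast treats the same copy as already expired.
fast_clock = is_fresh(header, server_now + timedelta(hours=2))
```

Here `header` is `"Mon, 01 Nov 2021 13:00:00 GMT"`, `in_sync` is `True`, and `fast_clock` is `False`.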

Cache-Control

The Cache-Control header was introduced in HTTP/1.1. It accepts a comma-delimited string of specific rules, called directives, which let you set whether a response may be cached and for how long.

public - The response can be stored in a shared cache.

private - The response is intended for a single user and can't be stored in a shared cache (proxy servers).

no-store - The response is not stored in the browser or on any server under any condition.

- Because no cached copy exists or is used during the request, a new request is made every time.

no-cache - The response is stored in the browser but considered stale, so validation from the server is required before it is used.

- A new request is made every time for validation using ETags; if the resource is not modified (304), the cached copy is used.

- This saves bandwidth, but the number of requests remains the same.

max-age - The time, in seconds, for which the cached response remains fresh.

- Preferred over the Expires header, as the time is relative to when the request was made.

- Avoids the clock-dependence and simultaneous-expiry problems of Expires, as the time is relative and not an absolute date.

s-maxage - Like max-age, but used by shared caches such as proxies, where it takes precedence over max-age.

must-revalidate - Once the cached response becomes stale, the browser must revalidate it and can't use the stale copy.

no-transform - Some CDNs have features that transform images at the edge for performance gains, but setting the no-transform directive tells the cache layer not to alter or transform the response in any way.
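As a rough sketch of how a cache might read these directives, the code below parses a Cache-Control value and decides how long a response may be reused. The function names `parse_cache_control` and `freshness_lifetime` are hypothetical, and the logic is deliberately simplified: it ignores must-revalidate, heuristic freshness, and the Expires fallback.

```python
def parse_cache_control(header):
    """Split a Cache-Control value into a {directive: value-or-None} dict."""
    directives = {}
    for part in header.split(","):
        part = part.strip().lower()
        if not part:
            continue
        name, _, value = part.partition("=")
        directives[name.strip()] = value.strip().strip('"') or None
    return directives


def freshness_lifetime(directives, shared_cache):
    """Seconds the response may be reused without revalidation, or None if it can't be stored."""
    if "no-store" in directives:
        return None  # must not be stored at all
    if shared_cache and "private" in directives:
        return None  # shared caches must not store private responses
    if "no-cache" in directives:
        return 0     # stored, but must be revalidated before every use
    if shared_cache and directives.get("s-maxage") is not None:
        return int(directives["s-maxage"])  # shared caches prefer s-maxage
    if directives.get("max-age") is not None:
        return int(directives["max-age"])
    return 0         # simplification: no explicit lifetime means always revalidate


parsed = parse_cache_control("public, max-age=600, s-maxage=3600")
browser_ttl = freshness_lifetime(parsed, shared_cache=False)
cdn_ttl = freshness_lifetime(parsed, shared_cache=True)
private_on_cdn = freshness_lifetime(parse_cache_control("private, max-age=60"), shared_cache=True)
```

With this header, the browser may reuse the response for 600 seconds and a shared cache for 3600 seconds, while the `private` response is not stored by the shared cache at all.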

Before HTML5, meta tags were used inside HTML to specify cache-control. This is discouraged now, as only browsers are able to parse and understand the meta tag; intermediate caches (proxy servers, CDNs) won't.

Validations

- ETag (Entity Tag)

- ETags are identifiers, typically hash values, associated with a specific version of the requested resource.

- Different inputs can be used to generate ETags, such as file size or file content.

- If the ETag has changed, the cached copy is considered expired and the server sends the latest data.

- A 304 response is sent if the ETag has not changed.

- Last-Modified

- A date-time stamp of when the content was last modified, which can be used to decide whether the cache is valid or not.

- As a date is involved, the content must be timestamped.

- The timestamp should be independent of time zone.

- File names usually change when a site is rebuilt, because of hashed file names. In this case, all files from the previous build are considered expired and are not used again by the browser.
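The ETag flow described above can be sketched as follows. The `make_etag` and `respond` functions are hypothetical, hashing the body is only one possible way to form an ETag, and a real server would also handle lists of ETags and weak validators in `If-None-Match`.

```python
import hashlib


def make_etag(body: bytes) -> str:
    """Derive an ETag from the response body (one of several possible strategies)."""
    return '"' + hashlib.sha256(body).hexdigest()[:16] + '"'


def respond(body: bytes, if_none_match=None):
    """Return (status, headers, body) for a GET, honouring a single If-None-Match value."""
    etag = make_etag(body)
    if if_none_match == etag:
        return 304, {"ETag": etag}, b""  # unchanged: the client reuses its cached copy
    return 200, {"ETag": etag}, body     # new or changed: send the full content


# First visit: no validator yet, so the full body is returned along with an ETag.
status1, headers1, body1 = respond(b"hello v1")
etag = headers1["ETag"]

# Revalidation while the content is unchanged: 304 with an empty body.
status2, _, body2 = respond(b"hello v1", if_none_match=etag)

# Revalidation after the content changed: full response with a new ETag.
status3, headers3, body3 = respond(b"hello v2", if_none_match=etag)
```

The second call still costs a round trip, but no body is transferred, which is the bandwidth saving that validation provides.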

Out of the box solution to scale

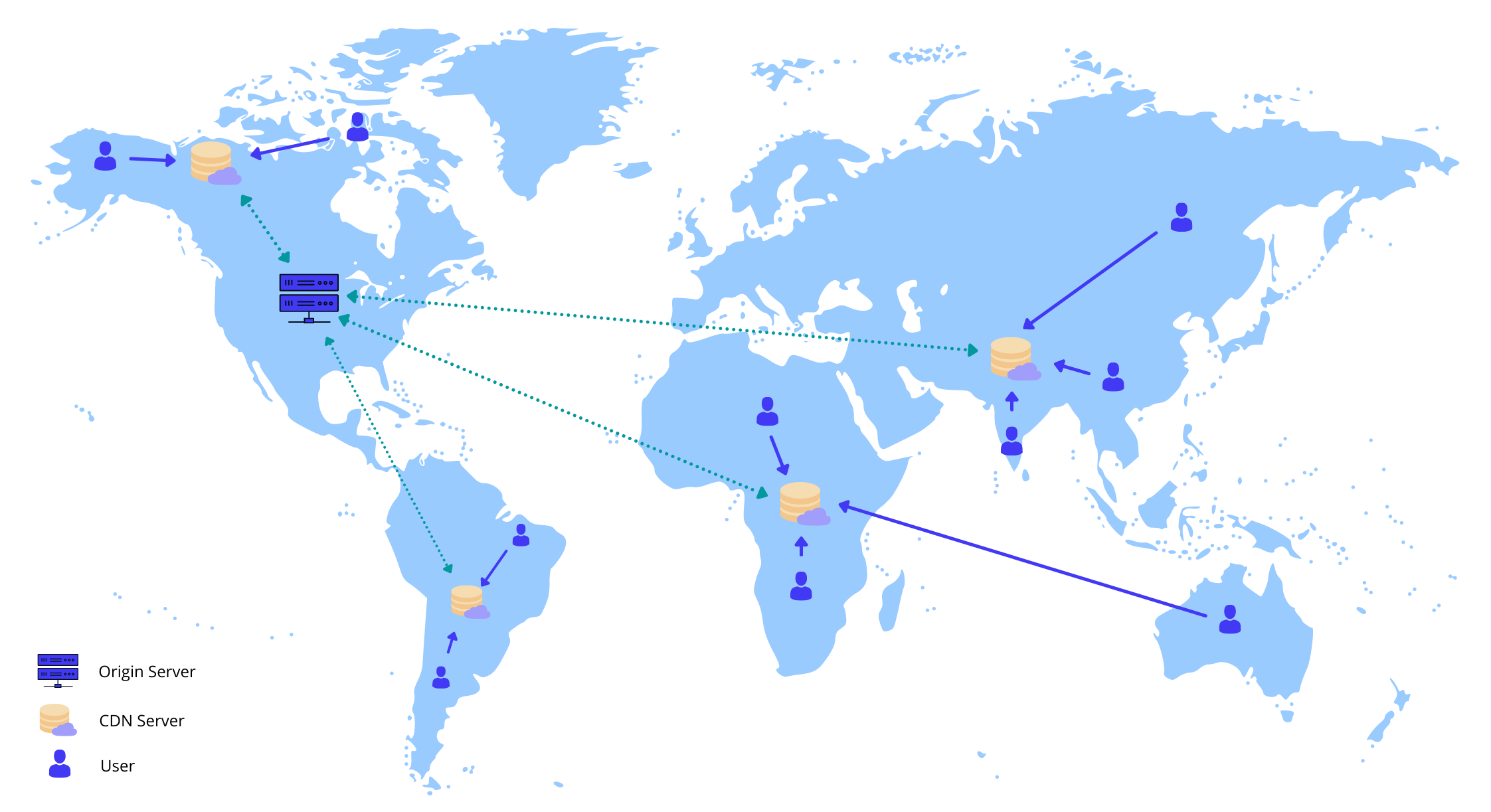

Since caching is such a powerful optimization technique, web app architectures have a dedicated layer for caching, and this layer is typically occupied by Content Delivery Networks (CDNs).

CDNs

A content delivery network (CDN) is a group of geographically distributed servers, also known as points of presence (POPs). CDNs are used to cache static content closer to consumers. This reduces the latency between the consumer and the content or data needed.

A CDN can achieve scalable content delivery by distributing load among its servers, by serving client requests from servers that are close to requesters, and by bypassing congested network paths.

- Distance reduction – reduce the physical distance between a client and the requested data

- Hardware/software optimizations – improve the performance of server-side infrastructure through efficient load balancing; RAM and SSDs are used to provide high-speed access to cached objects.

- Reduced data transfer – employ techniques such as minification and file compression to reduce file sizes so that initial page loads occur quickly. Smaller file sizes mean quicker load times.

- Security – keep a site secured with fresh TLS/SSL certificates, which ensure a high standard of authentication, encryption, and integrity. CDNs also provide protection against DoS attacks.

CDN cache hit ratio: this is the share of traffic, both in total bandwidth and in number of transactions, that is handled by the cache nodes rather than passed back to your origin servers.

CDN offload = (offloaded responses / total requests)
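As a quick worked example of the formula above, the sketch below computes the offload for some made-up traffic numbers. The `cdn_offload` function name and the request counts are assumptions chosen purely for illustration.

```python
def cdn_offload(offloaded_responses: int, total_requests: int) -> float:
    """Fraction of requests answered by the CDN without reaching the origin."""
    if total_requests == 0:
        return 0.0  # no traffic yet, so nothing has been offloaded
    return offloaded_responses / total_requests


total_requests = 10_000       # hypothetical number of requests seen by the CDN
offloaded_responses = 9_200   # hypothetical number served from cache nodes

offload = cdn_offload(offloaded_responses, total_requests)
origin_requests = total_requests - offloaded_responses
```

With these numbers the offload is 92%, meaning only 800 of the 10,000 requests reach the origin servers.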

Origin servers still have an important role to play when using a CDN, as important server-side code and data, such as a database of hashed client credentials used for authentication, are typically maintained at the origin.

So now we know that web caching can be applied at multiple layers, and that it helps us improve our app's performance and reduce operating costs. If your site serves static content, caching is a must-have for your web app. Look at how frequent and redundant your HTTP calls are, and add caching to your application architecture accordingly. Let's give the user a better experience. Adios 👋

Resources

- Intelligent Caching by Tom Barker

- Cloudflare Learning
